Tao Lei

Tao Lei, PhD is a Research Leader and Scientist at ASAPP leading an applied research team for natural language processing (NLP) and machine learning. Prior to joining ASAPP, Dr. Lei received his PhD from MIT in 2017, where he was advised by Prof. Regina Barzilay. Dr. Lei’s research interest lies within the algorithmic perspective of machine learning and applications to NLP.

Reducing the high cost of training NLP models with SRU++

Natural language models have achieved various groundbreaking results in NLP and related fields [1, 2, 3, 4]. At the same time, the size of these models has increased enormously, growing to millions (or even billions) of parameters, along with a significant increase in financial cost.

The cost of training large models limits the research community's ability to innovate, because a research project often requires many experiments. Consider training a top-performing language model [5] on the Billion Word benchmark. A single experiment would take 384 GPU days (6 days × 64 V100 GPUs), or as much as $36,000 using AWS on-demand instances. The high cost of building such models hinders their use in real-world business and makes monetization of AI and NLP technologies more difficult.
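The arithmetic behind these figures can be checked with a short sketch. It uses only the numbers quoted above; the per-GPU-hour price is not stated in this post, so the value computed here is merely the rate implied by the $36,000 upper bound, not an official AWS price.

```python
# Figures quoted in the text above.
DAYS = 6
GPUS = 64
QUOTED_COST_USD = 36_000  # "as much as" upper bound from the text

gpu_days = DAYS * GPUS          # total GPU days for one experiment
gpu_hours = gpu_days * 24       # the same budget expressed in GPU hours

# Assumption: the whole quoted cost is GPU rental, billed per GPU hour.
implied_rate_usd = QUOTED_COST_USD / gpu_hours

print(f"{gpu_days} GPU days = {gpu_hours} GPU hours")
print(f"Implied price: ${implied_rate_usd:.2f} per GPU hour")
```

Under that assumption, every additional experiment of the same size adds the same amount again, which is why a project needing many runs becomes expensive quickly.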

Our model obtains better perplexity and bits-per-character (bpc) while using 2.5x-10x less training time and cost compared to top-performing Transformer models. Our results reaffirm the empirical observations that attention is not all we need.

- Tao Lei, Research Leader and Scientist, ASAPP

The increasing computation time and cost highlight the importance of inventing computationally efficient models that retain top modeling power with reduced or accelerated computation.

The Transformer architecture was proposed to accelerate model training in NLP. Specifically, it is built entirely upon self-attention and avoids the use of recurrence. The rationale for this design choice, as mentioned in the original work, is to enable strong parallelization (by utilizing the full power of GPUs and TPUs). In addition, the attention mechanism is an extremely powerful component that permits efficient modeling of variable-length inputs. These advantages have made the Transformer an expressive and efficient unit and, as a result, the predominant architecture for NLP.

A couple of interesting questions arise following the development of the Transformer:

- Is attention all we need for modeling?

- If recurrence is not a compute bottleneck, can we find better architectures?

SRU++ and related work

We present SRU++ as a possible answer to the above questions. The inspiration for SRU++ comes from two lines of research:

First, previous work has tackled the parallelization and speed problem of RNNs and proposed various fast recurrent networks [7, 8, 9, 10]. Examples include Quasi-RNN and the Simple Recurrent Unit (SRU), both of which are highly parallelizable RNNs. This advance removes the need to give up recurrence in exchange for training efficiency.

Second, several recent works have achieved strong results by leveraging recurrence in conjunction with self-attention. For example, Merity (2019) demonstrated that a single-headed attention LSTM (SHA-LSTM) is sufficient to achieve competitive results on a character-level language modeling task while requiring significantly less training time. In addition, RNNs have been incorporated into Transformer architectures, yielding better results on machine translation and natural language understanding tasks [8, 12]. These results suggest that recurrence and attention are complementary in sequence modeling.

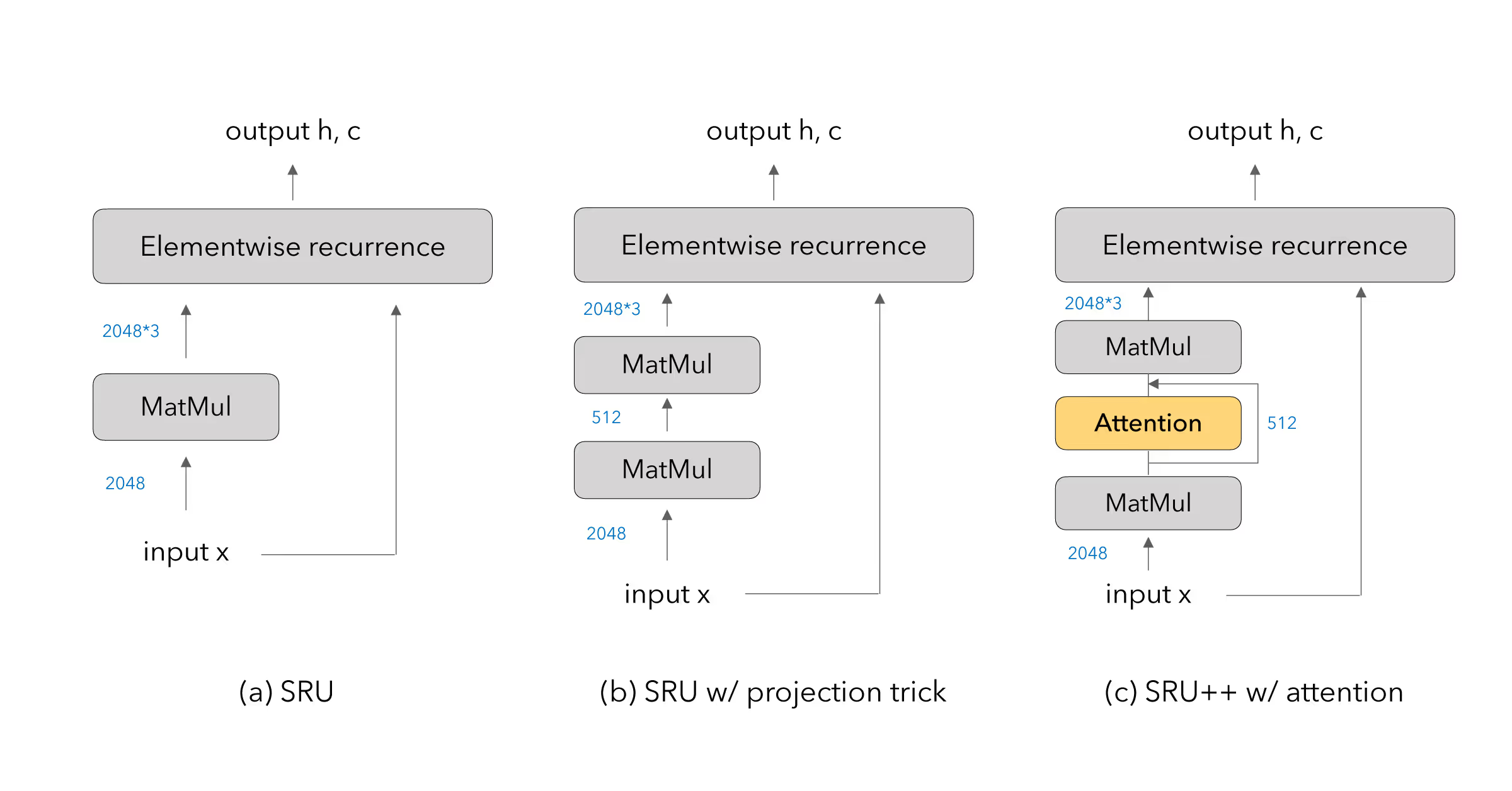

In light of this previous research, we enhance the modeling capacity of SRU by incorporating self-attention as part of the architecture. A simple illustration of the resulting architecture, SRU++, is shown in Figure 1c.

SRU++ replaces the linear mapping of the input (Figure 1a) with a sequence of steps. The input is first projected into a smaller dimension. An attention operation is then applied, followed by a residual connection. The result is projected back to the hidden size needed by the elementwise recurrence operation of SRU. In addition, not every SRU++ layer needs attention. When attention is disabled in SRU++, the network reduces to an SRU variant that uses dimension reduction to reduce the number of parameters (Figure 1b).
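The steps above can be sketched in NumPy for a single sequence. This is a minimal illustration rather than the released implementation. The parameter names (`W_down`, `W_k`, `W_v`, `W_up`, `v_f`, `v_r`, `b_f`, `b_r`), the single-head causal attention, and the exact gating follow our reading of the published SRU formulation and are assumptions, not details stated in this post. Normalization, dropout, and batching are omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)  # subtract row max for stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def init_params(d_model, d_attn, rng, scale=0.1):
    """Random illustrative parameters (hypothetical names)."""
    n = rng.standard_normal
    return {
        "W_down": n((d_model, d_attn)) * scale,   # project to smaller dim
        "W_k": n((d_attn, d_attn)) * scale,
        "W_v": n((d_attn, d_attn)) * scale,
        "W_up": n((d_attn, 3 * d_model)) * scale,  # back to recurrence size
        "v_f": n(d_model) * scale, "v_r": n(d_model) * scale,
        "b_f": np.zeros(d_model), "b_r": np.zeros(d_model),
    }

def srupp_layer(x, p, use_attention=True):
    """x: (seq_len, d_model) -> hidden states of the same shape."""
    seq_len, d_model = x.shape
    q = x @ p["W_down"]                       # 1. down-projection
    if use_attention:
        k, v = q @ p["W_k"], q @ p["W_v"]
        scores = (q @ k.T) / np.sqrt(q.shape[1])
        # Assumed causal mask: position t attends only to positions <= t.
        future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
        z = q + softmax(scores) @ v           # 2. attention + residual
    else:
        z = q                                 # attention disabled (Figure 1b)
    u_c, u_f, u_r = np.split(z @ p["W_up"], 3, axis=1)  # 3. up-projection
    c = np.zeros(d_model)
    h = np.empty_like(x)
    for t in range(seq_len):                  # 4. elementwise recurrence
        f = sigmoid(u_f[t] + p["v_f"] * c + p["b_f"])   # forget gate
        r = sigmoid(u_r[t] + p["v_r"] * c + p["b_r"])   # reset gate
        c = f * c + (1.0 - f) * u_c[t]                  # cell state update
        h[t] = r * c + (1.0 - r) * x[t]                 # highway connection
    return h

rng = np.random.default_rng(0)
params = init_params(d_model=8, d_attn=4, rng=rng)
x = rng.standard_normal((5, 8))
print(srupp_layer(x, params).shape)
print(srupp_layer(x, params, use_attention=False).shape)
```

Note that all matrix multiplications are applied to the whole sequence at once; only the cheap elementwise update runs step by step, which is what keeps this style of recurrence fast in practice.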

Results

1. SRU++ is a highly efficient neural architecture

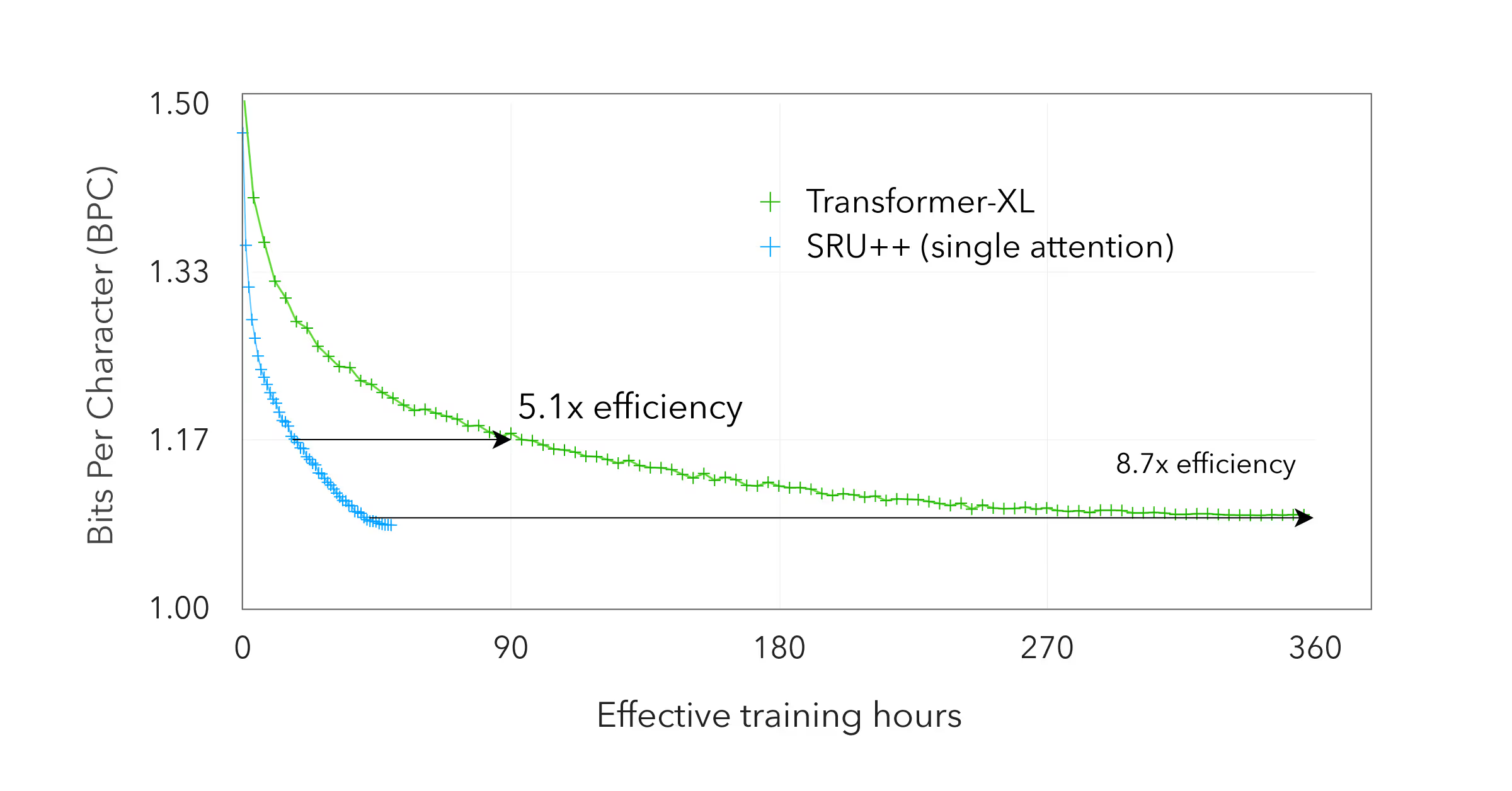

We evaluate SRU++ on several language modeling benchmarks, such as the Enwik8 dataset. Compared to Transformer models such as Transformer-XL, SRU++ can achieve similar results using only a fraction of the resources. Figure 2 compares the training efficiency of the two under directly comparable training settings. SRU++ is 8.7x more efficient at surpassing the dev result of Transformer-XL, and 5.1x more efficient at reaching a BPC (bits-per-character) of 1.17.

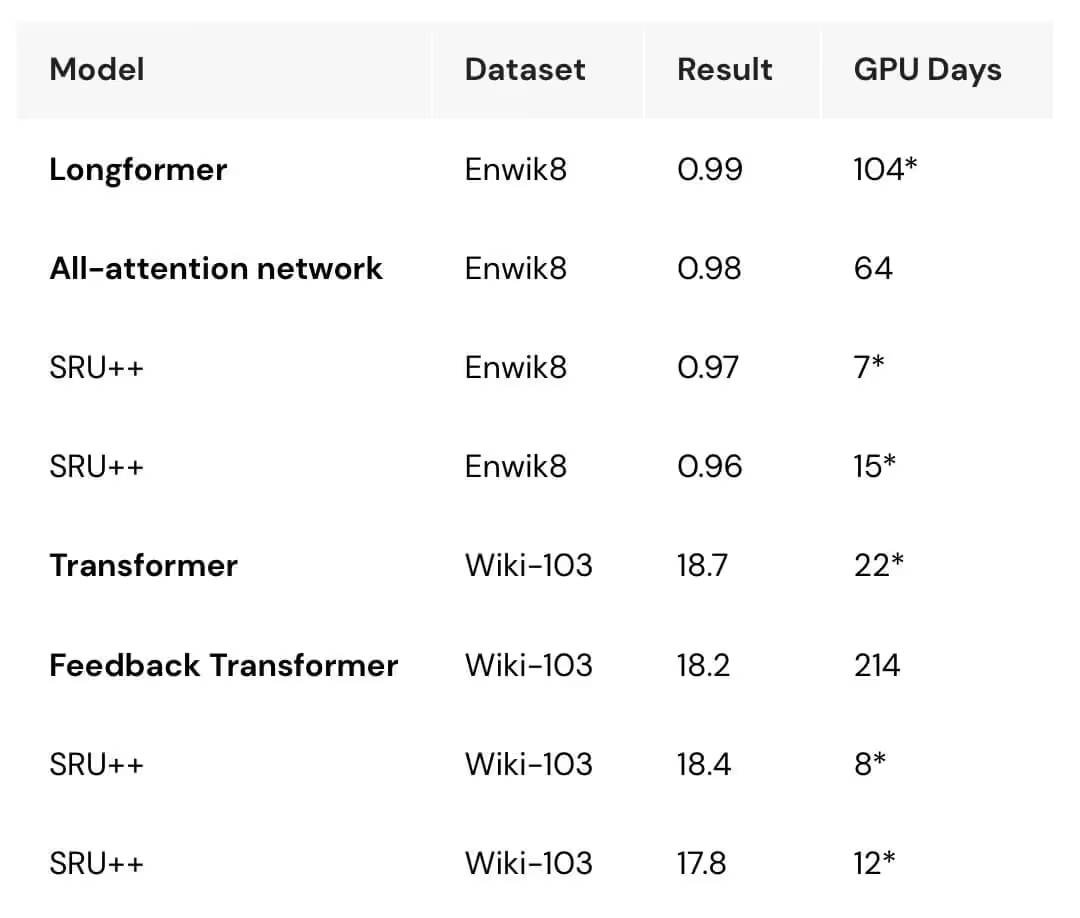

Table 1 further compares the training cost of SRU++ with the reported costs of leading Transformer-based models on the Enwik8 and Wiki-103 datasets. Our model can achieve over 10x cost reduction while still outperforming the baseline models on test perplexity or BPC.

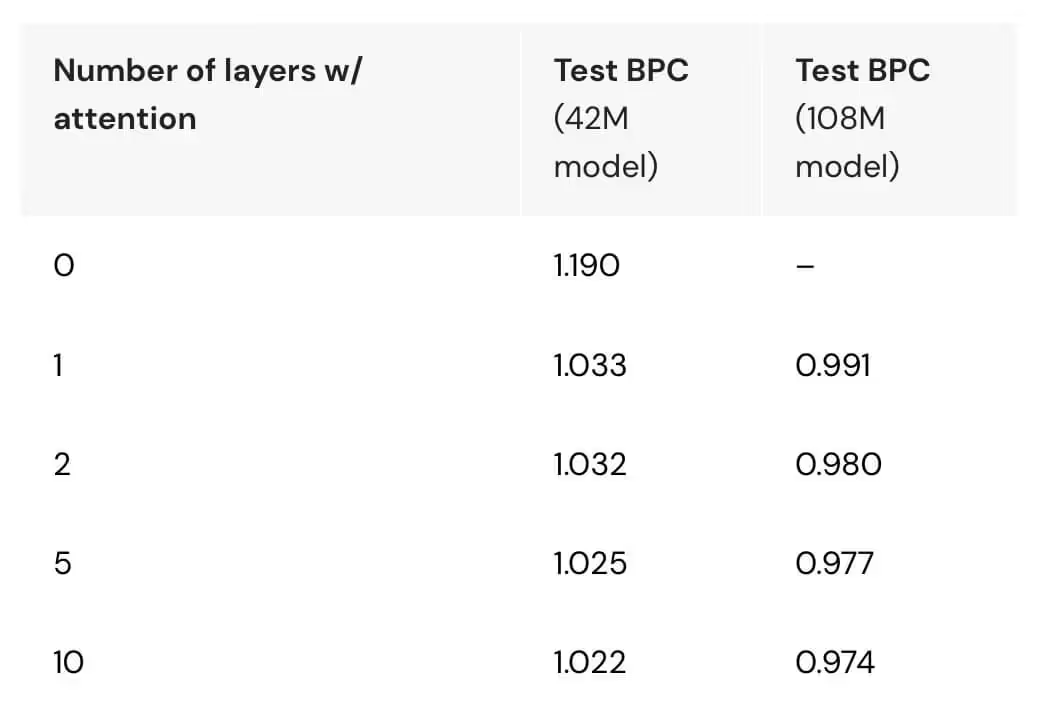

2. Little attention is needed given recurrence

Similar to the observation of Merity (2019), we found that using a couple of attention layers is sufficient to obtain state-of-the-art results. Table 2 shows an analysis in which the attention computation is enabled only every k layers of SRU++.
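As a rough illustration of what "every k layers" means for a layer stack, the sketch below builds a per-layer on/off schedule. The helper `attention_schedule` is hypothetical, and the choice to place attention in the k-th, 2k-th, ... layers (rather than, say, the first of every k) is an assumption; the post does not specify the placement. The 10-layer depth in the usage lines is also only an example.

```python
from typing import List

def attention_schedule(num_layers: int, k: int) -> List[bool]:
    """Return one flag per layer: True where attention is enabled.

    Assumed convention: layers are numbered from 1, and attention is
    enabled in layers k, 2k, 3k, ...
    """
    if num_layers < 1 or k < 1:
        raise ValueError("num_layers and k must be positive integers")
    return [(layer % k) == 0 for layer in range(1, num_layers + 1)]

# Example: a 10-layer stack with attention in every layer, every 2nd
# layer, every 5th layer, and only the last layer.
for k in (1, 2, 5, 10):
    flags = attention_schedule(10, k)
    print(k, sum(flags), flags)
```

Each flag would be passed to the corresponding layer to switch its attention on or off, so larger values of k trade attention computation for pure fast recurrence.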

Conclusion

We present a recurrent architecture with optional built-in self-attention that achieves leading model capacity and training efficiency. We demonstrate that highly expressive and efficient models can be derived using a combination of attention and fast recurrence. Our results reaffirm the empirical observations that attention is not all we need, and can be complemented by other sequential modeling modules.

For further reading, ASAPP also conducts research to reduce the cost of model inference. See, for example, our published work on model distillation and pruning.