Large language models (LLMs) have exploded over the past two years. It can be hard to keep up – much less figure out where the real value lies. The performance of these LLMs is impressive, but how can they deliver consistent, reliable business results?

OpenAI demonstrated that very, very large models, trained on very large amounts of data, can be surprisingly useful. Since then, there’s been a lot of innovation in the commercial and open-source spaces; it seems like every other day there’s a new model that beats others on public benchmarks.

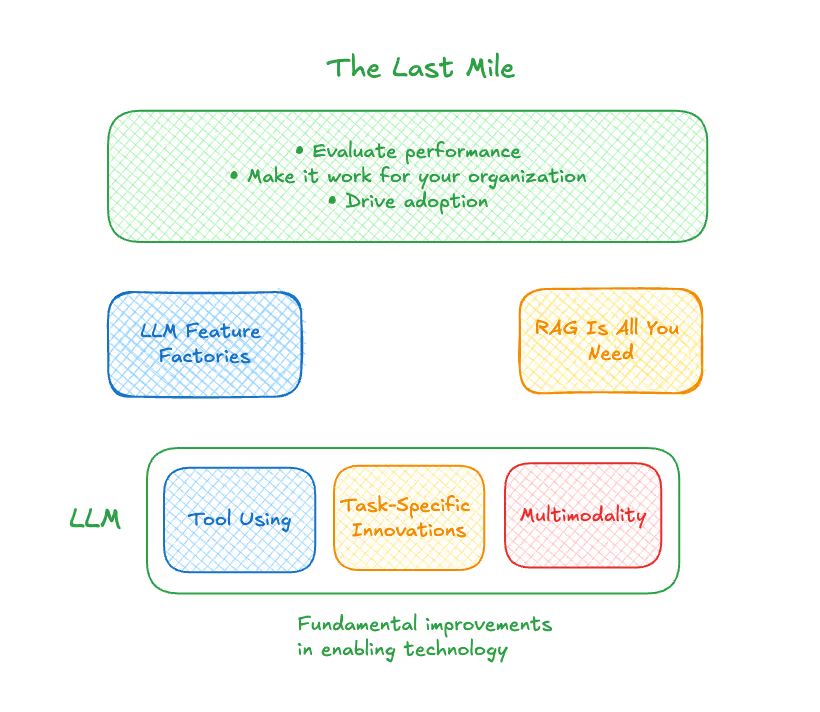

These days, most of the innovation in LLMs isn’t really coming from the language part at all. It’s coming from three places:

- Tool use: the ability of an LLM to call functions, such as looking up an order in a database or querying an external API. There’s a good argument to be made that tool use defines intelligence in humans and animals, so it’s an important capability.

- Task-specific innovations: advances that deliver considerable improvements in a narrow domain, such as answering binary (yes/no) questions or summarizing research data.

- Multimodality: the ability of models to accept images, audio, and other non-text data as inputs.
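To make tool use a little more concrete: in a typical setup, the application tells the model which functions exist, the model replies with a structured request naming a function and its arguments, and the application runs that function and hands the result back. The sketch below illustrates only that dispatch step, without calling a real model. It assumes the model’s reply arrives as JSON of the form `{"tool": ..., "arguments": ...}`; the `get_order_status` tool, the `TOOLS` registry, and the order data are all hypothetical.

```python
import json
from typing import Any, Callable

# Hypothetical order data; a real deployment would query an order system.
ORDERS = {"A100": "shipped", "A101": "processing"}


def get_order_status(order_id: str) -> str:
    """Hypothetical tool: return an order's status, or 'unknown'."""
    return ORDERS.get(order_id, "unknown")


# Registry mapping the tool names the model may request to Python functions.
TOOLS: dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def dispatch_tool_call(model_reply: str) -> str:
    """Run the tool a model asked for and return its result as text.

    Assumes (hypothetically) the reply is JSON like
    {"tool": "<name>", "arguments": {...}}. Tools that are not in the
    registry are rejected instead of executed.
    """
    call = json.loads(model_reply)
    name = call.get("tool")
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    result = TOOLS[name](**call.get("arguments", {}))
    # In a full loop, this result would be sent back to the model
    # as context for its next response.
    return str(result)


if __name__ == "__main__":
    reply = '{"tool": "get_order_status", "arguments": {"order_id": "A100"}}'
    print(dispatch_tool_call(reply))
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed, which is one small piece of the safety work discussed later in this article.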

New capabilities – and challenges

Two exciting developments emerge from these innovations. First, it’s much easier to prototype new solutions – instead of collecting data to train a machine learning (ML) model, you can just write a specification, in other words, a prompt. Many solution providers are using this fast prototyping to roll out new features that simply “wrap” an LLM and a prompt. Because the process is so fast and inexpensive, these so-called “feature factories” can ship many new features and then see what sticks.

Second, making LLMs useful in real-world applications depends on not relying on the model’s “intrinsic knowledge” – that is, what it learned during training. It is more valuable to feed it contextually relevant data and have it produce a response based on that data. This is commonly called retrieval-augmented generation, or RAG. As a result, many companies are making it easier to make your data available to the LLM – connecting it to search engines, databases, and more.
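The core of RAG is simple to sketch: find the passages most relevant to a question, then place them in the prompt so the model answers from that context rather than from its training data. The example below shows only the retrieval and prompt-assembly steps; the final call to an LLM is not shown. It uses a toy keyword-overlap score as a stand-in for a real search engine or vector database, and the documents, `retrieve`, and `build_prompt` are hypothetical.

```python
import re

# Hypothetical knowledge base; in practice this would live in a
# search engine or database.
DOCUMENTS = [
    "Refunds are issued within 5 business days of receiving the return.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Premium members get free shipping on all orders.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query.

    Toy keyword-overlap scoring stands in for a real retriever
    (for example BM25 or embedding similarity).
    """
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the model in retrieved text instead of its training data."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not in the "
        "context, say you don't know.\n\n"
        f"Context:\n{context_block}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "How long do refunds take?"
    # The resulting prompt would then be sent to an LLM (not shown).
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

Note that nothing is put “inside” the model: the retrieved text travels in the prompt, which is why the quality of the retrieval step matters so much to the quality of the answer.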

The catch with these rapidly developed capabilities is that they typically place the burden of making the technology work on you and your organization. You need to evaluate whether the LLM-based feature works for your business. You need to determine whether the RAG-type solution solves a problem you actually have. And you need to figure out how to drive adoption. How, then, do you measure the performance of these solutions? And how many edge cases do you have to test to be sure they are dependable?

This “last mile” of AI solution development and deployment costs time and resources, and it requires specialized expertise to get right.

Getting over the finish line with a generative AI agent

High-quality LLMs are widely available. RAG can dramatically improve their performance. And it’s easier than ever to spin up new prototypes. None of that makes it easy to develop a customer-facing generative AI solution that delivers reliable value. Putting this new technology to work – namely, LLMs that can use the contextually relevant tools at their disposal – requires expertise to make sure it works and doesn’t cause more problems than it solves.

It takes a deep understanding of how each system performs, how customers interact with it, and how to configure and customize the solution reliably for your business. This expertise is where the real value comes from.

Plenty of solution providers can stand up an AI agent that uses the best LLMs, enhanced with RAG, to answer customers’ questions. But not all of them cover the last mile: making the agent work well enough in a contact center that you can confidently deploy it to your customers without worrying that it will mishandle queries and frustrate them.

Generative AI services from the major public cloud providers offer foundational capabilities, and feature factories churn out plenty of products. But neither gets you across the finish line with a generative AI agent you can trust. Developing solutions that add value requires investing in input safety, output safety, appropriate language, task adherence, solution effectiveness, continuous monitoring and refinement, and more. It also takes significant technical sophistication to optimize the system for real customers.

That should narrow your list of viable vendors for generative AI agents to those that don’t abandon you in the last mile.