The term “hallucination” has become both a buzzword and a significant concern. Unlike traditional IT systems, Generative AI can produce a wide range of outputs based on its inputs, often leading to unexpected and sometimes incorrect responses. This unpredictability is what makes Generative AI both powerful and risky. In this blog post, we will explore what hallucinations are, why they occur, and how to ensure that AI responses are safely grounded to prevent these errors.

What is a hallucination in generative AI?

Definition and types of hallucinations

In the context of generative AI, a hallucination refers to an output that is not grounded in the input data or the knowledge base the AI is supposed to rely on. Hallucinations can be broadly categorized into two types:

- Harmless Hallucinations: These are errors that do not significantly impact the user experience or the integrity of the information provided. For example, an AI might generate a slightly incorrect but inconsequential detail in a story.

- Harmful Hallucinations: These are errors that can mislead users, compromise brand safety, or result in incorrect actions. For instance, an AI providing incorrect medical advice or financial information falls into this category.

Two axes of hallucinations

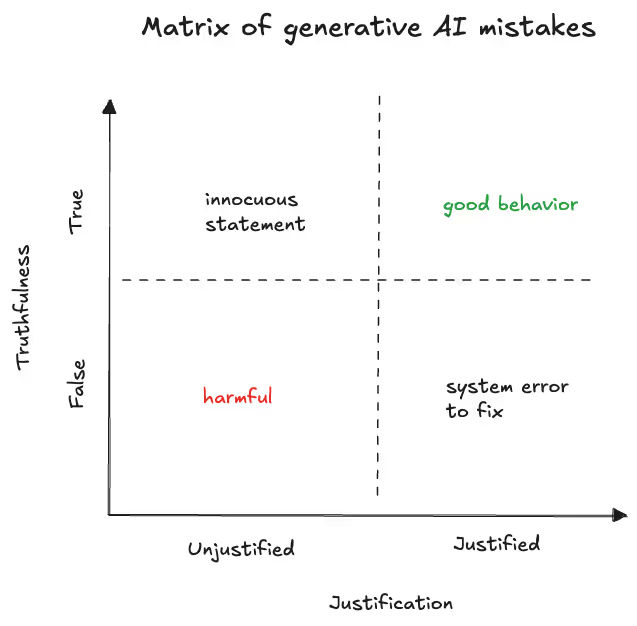

To better understand hallucinations, we can consider two axes: justification, meaning whether the AI had information indicating that its statement was true, and truthfulness, meaning whether the statement was actually true.

Based on these axes, we can classify AI responses into four categories:

- Justified and True: The ideal scenario where the AI’s response is both correct and based on available information.

- Justified but False: The AI’s response is based on outdated or incorrect information. This can be fixed by improving the information available. For example, if an API response is ambiguous, it can be updated so that it is clear what it is referring to.

- Unjustified but True: The AI’s response is correct but not based on the information it was given. For example, the AI might tell a customer to arrive at the airport 2 hours before a flight departure. If this was not grounded in, say, a knowledge base article, then the statement is technically a hallucination even if it is true.

- Unjustified and False: The worst-case scenario where the AI’s response is both incorrect and not based on any available information. These are harmful hallucinations that could require an organization to reach out to the customer to fix the mistake.
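The four quadrants above can be written down as a small lookup. This is only an illustrative sketch: the names `ResponseCategory` and `categorize` are our own, and in practice the hard part is deciding the two boolean inputs, not combining them.

```python
from enum import Enum


class ResponseCategory(Enum):
    """The four quadrants formed by the justification and truthfulness axes."""
    JUSTIFIED_TRUE = "justified and true"
    JUSTIFIED_FALSE = "justified but false"
    UNJUSTIFIED_TRUE = "unjustified but true"
    UNJUSTIFIED_FALSE = "unjustified and false"


def categorize(justified: bool, truthful: bool) -> ResponseCategory:
    """Map the two axes onto one of the four categories (hypothetical helper)."""
    if justified:
        return (ResponseCategory.JUSTIFIED_TRUE if truthful
                else ResponseCategory.JUSTIFIED_FALSE)
    return (ResponseCategory.UNJUSTIFIED_TRUE if truthful
            else ResponseCategory.UNJUSTIFIED_FALSE)


# The airport example: correct advice that no knowledge base article backs up.
print(categorize(justified=False, truthful=True).value)  # unjustified but true
```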

Why do hallucinations occur with generative AI?

Hallucinations in generative AI occur for several reasons. Generative models are inherently stochastic, meaning they can produce different outputs for the same input. Additionally, the large output space of these models increases the likelihood of errors, as they are capable of generating a wide range of responses. AI systems that rely on incomplete or outdated data are also prone to making incorrect statements. Finally, complex instructions can be misinterpreted, which may cause the model to generate unjustified responses.
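To make the stochastic point concrete, here is a toy sketch of temperature sampling over three candidate tokens. The tokens, scores, and function names are invented for illustration; real models work over far larger vocabularies, and this is not a description of any particular model's decoder.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random according to the softmax probabilities."""
    weights = softmax(logits, temperature)
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical candidates for "arrive ___ hours before departure".
tokens = ["2", "3", "5"]
logits = [2.0, 1.5, 0.5]
rng = random.Random(0)

# Same input, repeated draws: the chosen token can differ between calls.
print([sample_token(tokens, logits, 1.0, rng) for _ in range(10)])
```

Lowering the temperature concentrates probability on the top-scoring token, which reduces variation but does not by itself make the top-scoring token correct.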

Hallucination prevention and management

We typically think about four pillars when it comes to preventing and managing hallucinations:

- Preventing hallucinations from occurring in the first place

- Catching hallucinations before they reach the customer

- Tracking hallucinations post-production

- Improving the system

1. Preventing hallucinations: Ensuring responses are properly grounded

One of the most effective ways to prevent hallucinations is to ensure that AI responses are grounded in reliable data. This can be achieved through:

- Explicit Instructions: Providing clear and unambiguous instructions can help the AI interpret and respond accurately.

- API Responses: Integrating real-time data from APIs ensures that the AI has access to the most current information.

- Knowledge Base (KB) Articles: Relying on a well-maintained knowledge base can provide a solid foundation for AI responses.
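As a rough sketch of how these three sources might be combined, the snippet below assembles a single prompt from instructions, API data, and KB articles. `GroundingContext`, `build_grounded_prompt`, and the prompt wording are all assumptions made for illustration, not a prescribed format.

```python
from dataclasses import dataclass


@dataclass
class GroundingContext:
    """The three grounding sources listed above (hypothetical container)."""
    instructions: str
    api_response: dict
    kb_articles: list


def build_grounded_prompt(question: str, ctx: GroundingContext) -> str:
    """Assemble a prompt that tells the model to answer only from the sources."""
    api_lines = "\n".join(f"- {k}: {v}" for k, v in ctx.api_response.items()) or "- (none)"
    kb_lines = "\n".join(f"- {a}" for a in ctx.kb_articles) or "- (none)"
    return (
        f"Instructions:\n{ctx.instructions}\n"
        "Answer only from the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"API data:\n{api_lines}\n\n"
        f"Knowledge base:\n{kb_lines}\n\n"
        f"Customer question:\n{question}"
    )


ctx = GroundingContext(
    instructions="Be concise and polite.",
    api_response={"flight": "XY123", "gate": "B12"},  # made-up example data
    kb_articles=["Arrive at the airport 2 hours before departure."],
)
print(build_grounded_prompt("When should I get to the airport?", ctx))
```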

2. Catching hallucinations: Approaches to catch hallucinations in production

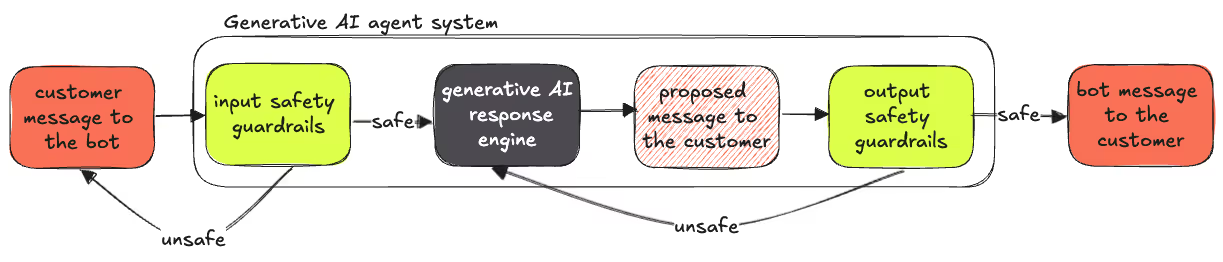

To minimize the risk of hallucinations, several safety mechanisms can be implemented:

- Input Safety Mechanisms: Detecting and filtering out abusive or out-of-scope requests before they reach the AI system can prevent inappropriate responses.

- Output Safety Mechanisms: Assessing the proposed AI response for safety and accuracy before it is delivered to the user. This can involve:

  - Deterministic Checks, which use rules-based systems to ensure that certain types of language or content are never sent to users.

  - Classification Models, which employ machine learning models to classify and filter out potentially harmful responses. For example, a model can classify whether the information contained in a proposed response has been grounded on information retrieved from the right data sources. If this model suspects that the information is not grounded, it can reprompt the AI system to try again with this feedback.
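The output-side checks can be sketched as a small loop: run a deterministic rule check, then a groundedness classifier, and reprompt with feedback if either fails. Everything named here is hypothetical, including the banned phrases, the `generate` and `is_grounded` callables, and the fallback message; the classifier in particular is just a placeholder for a real model.

```python
from typing import Callable, List, Optional

# Hypothetical rule list; a real deployment would maintain its own.
BANNED_PHRASES = ("guaranteed refund", "legal advice")
FALLBACK_MESSAGE = "Let me connect you with a colleague who can help."


def passes_deterministic_checks(response: str) -> bool:
    """Rules-based check: reject drafts containing any banned phrase."""
    lowered = response.lower()
    return not any(phrase in lowered for phrase in BANNED_PHRASES)


def respond_with_guardrails(
    generate: Callable[[Optional[str]], str],
    is_grounded: Callable[[str, List[str]], bool],
    sources: List[str],
    max_attempts: int = 2,
) -> str:
    """Return the first draft that passes both checks, else a safe fallback."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        draft = generate(feedback)  # feedback is None on the first attempt
        if not passes_deterministic_checks(draft):
            feedback = "The previous draft contained disallowed language."
            continue
        if not is_grounded(draft, sources):
            feedback = "The previous draft included information not found in the sources."
            continue
        return draft
    return FALLBACK_MESSAGE
```

Capping the number of attempts is one way to keep latency bounded; what to do when every attempt fails (here, a canned handoff message) is a design choice we have assumed rather than something the approach dictates.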

3. Tracking hallucinations: post-production

A separate post-production model can be used to classify mistakes in AI responses in more detail. While the “catching hallucination” model must balance effectiveness with latency, the post-production mistake monitoring model can be much larger, as latency is not a concern.

A well-defined hallucination taxonomy is crucial for systematically identifying, categorizing, and addressing errors in Generative AI systems. With such a taxonomy in place, users can aggregate error reports, which makes it easier to identify, prioritize, and resolve issues quickly.

The following dimensions help identify the type of error, the source of the misinformation, and its impact.

- Error Category - broad classification of the generative AI system's errors.

- Error Type - specific nature or cause of an AI system error.

- Information Source - origin of data used by the AI system.

- System Source - component responsible for generating or processing AI output.

- Customer Impact Severity - level of negative effect on the customer.
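One way to picture the taxonomy is as a record with one field per dimension, which can then be counted and grouped. The field values and the 1 to 3 severity scale below are invented for illustration; the actual labels would come from your own taxonomy.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorReport:
    """One classified mistake, with one field per taxonomy dimension."""
    error_category: str
    error_type: str
    information_source: str
    system_source: str
    customer_impact_severity: int  # assumed scale: 1 (low) to 3 (high)


def count_by(reports, field: str) -> Counter:
    """Aggregate reports along a single taxonomy dimension."""
    return Counter(getattr(report, field) for report in reports)


# Made-up reports, purely to show the aggregation.
reports = [
    ErrorReport("hallucination", "unjustified claim", "none", "response generation", 3),
    ErrorReport("hallucination", "outdated fact", "KB article", "retrieval", 2),
    ErrorReport("hallucination", "outdated fact", "KB article", "retrieval", 1),
]

print(count_by(reports, "error_type").most_common())
print(count_by(reports, "information_source").most_common())
```

Grouping by `information_source` or `system_source` in this way points at where a fix is likely to pay off, for example a stale KB article versus the generation step.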

4. Improving the system

Continuous improvement is crucial for managing and reducing hallucinations in AI systems. This involves several key practices. Regular updates keep the AI system current with the latest data and information. Feedback loops allow errors to be reported and analyzed, which helps improve the system over time. Regular training and retraining of the AI model enable it to adapt to new data and scenarios. Finally, human oversight is critical, with contact center supervisors reviewing and correcting AI responses, especially in high-stakes situations.

Conclusion

By understanding the nature of hallucinations and implementing robust mechanisms to prevent, catch, and manage them, organizations can harness the power of Generative AI while minimizing risks. Just as human agents in contact centers are managed and coached to improve performance, Generative AI systems can also be continually refined to ensure they deliver accurate and reliable responses. By focusing on grounding responses in reliable data, employing safety mechanisms, and fostering continuous improvement, we can ensure that AI responses are safely grounded and free from harmful hallucinations.
