Operations teams have been using agent handle time (AHT) to measure agent efficiency, manage workforce, and plan operation budgets for decades. However, customers have been increasingly demonstrating they’d prefer to communicate asynchronously—meaning they can interact with agents when it is convenient for them, taking a pause in the conversation and seamlessly resuming minutes or hours later, as they do when multitasking, handling interruptions, and messaging with family and friends.

In this new asynchronous environment, AHT is an inappropriate measure of how long it takes agents to handle a customer’s issue: it overstates the amount of time an agent spends working with a customer. Rather, we consider agent throughput as a better measure of agent efficiency. Throughput is the number of issues an agent handles over some period of time (e.g. 10 issues per hour) and is a better metric for operations planning.

One common strategy for increasing throughput is to merely give agents more issues to handle at once, which we call concurrency. However, attempts to increase throughput by simply increasing an agent’s concurrency without giving them better tools to handle multiple issues at once are short-sighted. Issues that escalate to agents are complex and require significant cognitive load, as “easier” issues have typically already been automated.

Therefore, naively increasing agent concurrency without considering cognitive load often reduces agent throughput, frustrates customers who want faster response times, and burns agents out quickly.

The ASAPP solution to this is to use an AI-powered flexible concurrency model. A machine learning model measures and forecasts the cognitive demand on agents and dynamically increases concurrency in an effective way. This model considers several factors including customer behaviors, the complexities of issues, and expected work required to resolve the issue to determine an agent’s concurrency capacity at a given point in time.

We’re able to increase throughput by reducing demands on the agent’s time and cognitive load, resulting in agents more efficiently handling conversations, while elevating the customer experience.

Measuring throughput

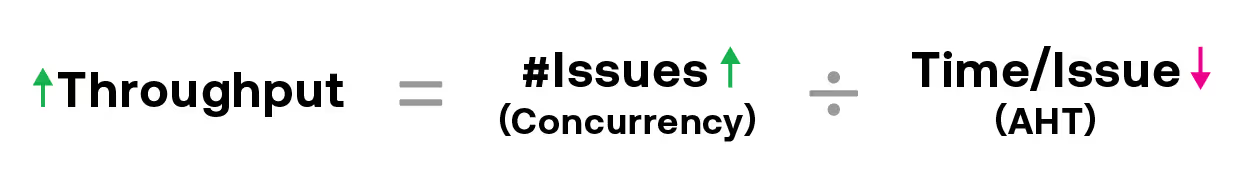

In equation form, throughput is the inverse of agent handle time (AHT) multiplied by the number of issues an agent can handle concurrently.

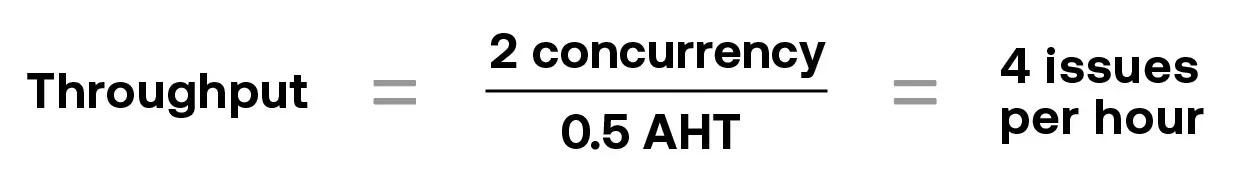

For example, if an agent takes half an hour on average to handle an issue and she handles two issues concurrently, her throughput is 4 issues per hour (2 issues ÷ 0.5 hours).
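The relationship above can be sketched in a few lines of Python. This is a minimal illustration rather than ASAPP's implementation; the function name `throughput` and its parameter names are assumptions made for the example.

```python
def throughput(aht_hours: float, concurrency: float) -> float:
    """Issues handled per hour: concurrency divided by average handle time.

    Hypothetical helper for illustration; the names are not from any ASAPP API.
    """
    if aht_hours <= 0:
        raise ValueError("aht_hours must be positive")
    if concurrency < 0:
        raise ValueError("concurrency must be non-negative")
    return concurrency / aht_hours


# The worked example from the text: 30-minute AHT, two concurrent issues.
issues_per_hour = throughput(aht_hours=0.5, concurrency=2)
print(issues_per_hour)  # 4.0
```

Halving AHT or doubling concurrency has the same effect on the result, which is why both levers matter.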

The equation shows two obvious ways to increase throughput:

- Reduce the time it takes to handle each individual issue (reduce the AHT); and

- Increase the number of issues an agent can concurrently handle.

At ASAPP, we think about these two approaches to increasing throughput, particularly as customers move to adopt more asynchronous communication.

AHT as a metric is only applicable when the agent handles one contact at a time—and it’s completed end-to-end in one session. It doesn’t take into account concurrent digital interactions, nor asynchronous interactions.

Heather Reed, PhD

Reducing AHT

The first piece of the throughput-maximization problem entails identifying, quantifying, and reducing the time and effort required for agents to perform the tasks to solve a customer issue.

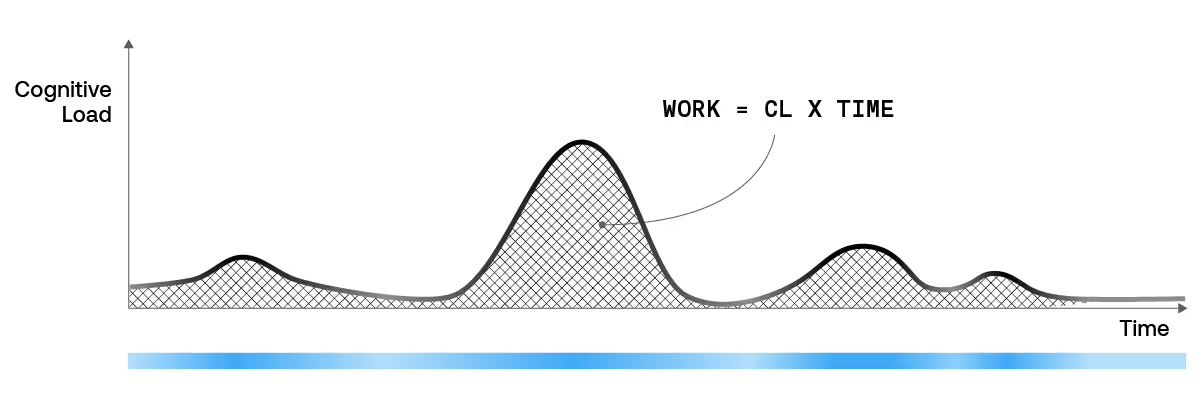

We think of the total work performed by an agent as a function of both the cognitive load (CL) and the time required to perform a task. This definition of work is analogous to the definition of work in physics, where Work = (Load applied to an object) × (Distance to move the object).

The agents’ cognitive load during the conversations (visualized by the height of the black curve and the intensity of the green bar) is affected by:

- crafting messages to the customer;

- looking up external information for the customer;

- performing work on behalf of the customer;

- context switching among multiple customers; etc.

The total work performed is the area under the curve, which can be reduced by decreasing the effort (CL) and the time needed to perform tasks. We can also summarize an interaction by its average cognitive load (a flat line), and in a synchronous environment that average can be very accurate.
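To make the "area under the curve" idea concrete, here is a small sketch that approximates total work from sampled cognitive-load values using the trapezoidal rule. The sample times, the 0-to-1 load scale, and the function names are all assumptions made for illustration; the source does not specify how cognitive load is measured.

```python
def total_work(times, loads):
    """Approximate the area under a cognitive-load curve (trapezoidal rule).

    times: sample times in minutes, strictly increasing (assumed units)
    loads: cognitive load at each sample, on a hypothetical 0..1 scale
    """
    if len(times) != len(loads) or len(times) < 2:
        raise ValueError("need at least two matching samples")
    work = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        work += 0.5 * (loads[i] + loads[i - 1]) * dt
    return work


def average_load(times, loads):
    """The flat-line summary: total work divided by elapsed time."""
    return total_work(times, loads) / (times[-1] - times[0])


# Hypothetical 10-minute conversation with a burst of effort early on.
times = [0, 2, 4, 6, 10]
loads = [0.2, 0.8, 0.8, 0.4, 0.4]
print(round(total_work(times, loads), 2))
print(round(average_load(times, loads), 2))
```

Lowering any load value or shortening any interval shrinks the area, which mirrors the two ways of reducing work described above.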

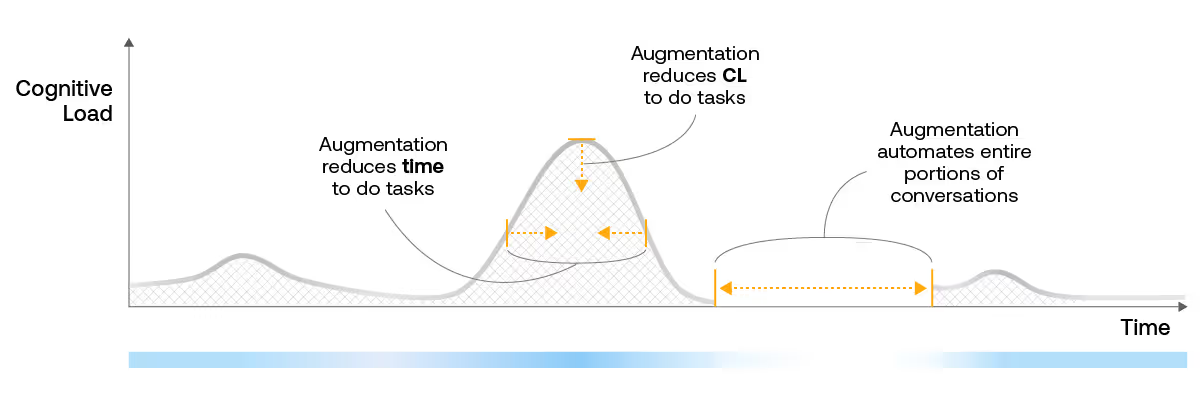

ASAPP automation and agent augmentation features are designed to reduce both handling time and the agents’ cognitive load, that is, the amount of energy it takes to solve a customer’s problem or upsell a prospect. For example, Autosuggest provides message recommendations that contain relevant customer information, saving agents the time and effort they would need to spend looking up information about customers (e.g. their bill amount) as well as the time spent physically crafting the message.

For synchronous conversations, that means each call is less tiring. For asynchronous conversations, that means agents can handle an increasing number of issues without corresponding increases in stress.

In some cases, we can completely eliminate the cognitive load from a part of a conversation. Our auto-pilot feature enables automation of entire portions of the interaction (for example, collecting a customer’s device information), freeing up agents’ attention.

Using multiple augmentation features during an issue reduces both the overall AHT and the work the agent performs.

When the customer is asynchronous, the majority of the agent’s time would be spent waiting for the customer to respond. This is not an effective use of the agent’s time, which brings us to the second piece of the throughput-maximization problem.

Increasing concurrency

We can improve agent throughput by increasing concurrency. Unfortunately, this is more complex than simply increasing the number of issues assigned to an agent at once. Issues that escalate to agents are complex and emotive, as customers typically get basic needs met through self-service or automation. If an agent’s concurrency is increased without forecasting workload, then increasing concurrency will actually have an adverse effect on the AHT of individual issues.

If increasing concurrency results in increased AHT, then the impact on overall throughput can be negative. What’s more, customers can become frustrated at the lack of response from the agent and bounce to other support channels or, worse, consider switching providers, and agents may feel overwhelmed and risk burning out or churning out.
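The effect described above can be illustrated with a toy model. The quadratic penalty below is purely an assumption chosen to show the shape of the trade-off; it is not a measured relationship and not ASAPP's model, and the function names are hypothetical.

```python
def aht_with_penalty(base_aht_hours, concurrency, penalty=0.25):
    """Hypothetical model: each extra concurrent issue inflates AHT quadratically."""
    return base_aht_hours * (1 + penalty * (concurrency - 1) ** 2)


def throughput_at(base_aht_hours, concurrency, penalty=0.25):
    """Issues per hour once the assumed AHT penalty is applied."""
    return concurrency / aht_with_penalty(base_aht_hours, concurrency, penalty)


# Base AHT of 30 minutes; compare concurrency levels 1 through 4.
for c in range(1, 5):
    print(c, round(throughput_at(0.5, c), 2))
```

Under these assumed numbers, throughput rises from one to two concurrent issues and then declines, because the growth in AHT outpaces the extra issues being worked.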

Flexible concurrency

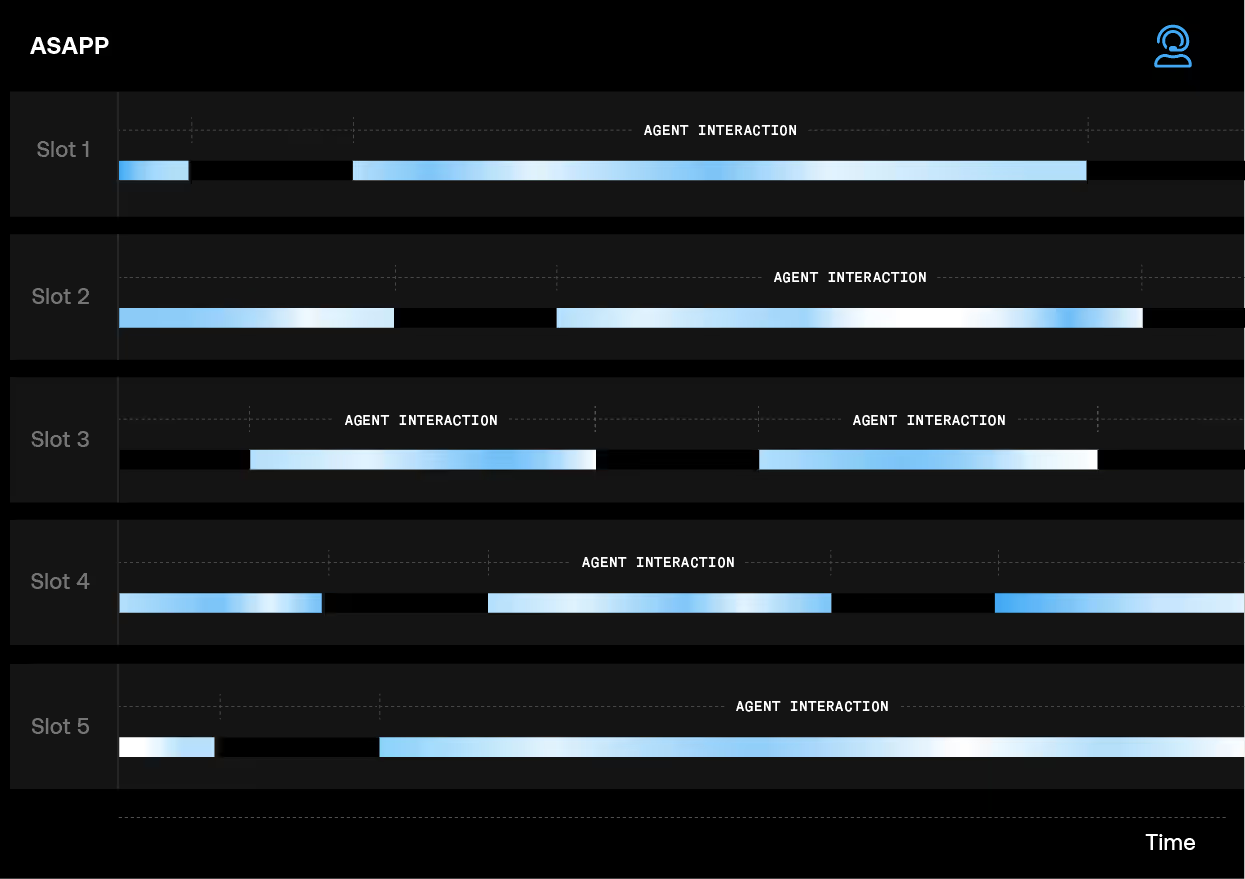

We can alleviate this problem with flexible concurrency, an AI-driven approach. A machine learning model keeps track of the work the agent is doing and dynamically increases the agent’s concurrency only as far as the cognitive load remains manageable.
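As a rough sketch of the idea, the snippet below replaces the machine learning model with a simple rule: an agent accepts a new issue only if the forecast cognitive load of all active issues stays within a budget. The `Issue` class, the `forecast_load` field, the 0-to-1 scale, and the `load_budget` threshold are all hypothetical; the source does not describe how ASAPP's model forecasts or combines load.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    issue_id: str
    forecast_load: float  # assumed forecast of cognitive demand, 0..1 scale


def can_accept(active, candidate, load_budget=1.0):
    """Return True if adding `candidate` keeps total forecast load within budget.

    A stand-in for a learned model: here the loads are simply summed.
    """
    current = sum(issue.forecast_load for issue in active)
    return current + candidate.forecast_load <= load_budget


# An agent with one demanding issue and one that is mostly waiting on the customer.
active = [Issue("billing-dispute", 0.5), Issue("awaiting-reply", 0.1)]
print(can_accept(active, Issue("password-reset", 0.3)))   # True
print(can_accept(active, Issue("service-outage", 0.6)))   # False
```

The point of the sketch is that concurrency is not a fixed slot count: an agent whose customers are idle has room for more issues than one in the middle of several demanding conversations.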

Combined with ASAPP augmentation features, our flexible concurrency model can safely increase an agent’s concurrency, enabling higher throughput and increased agent efficiency.

In summary

As customers increasingly prefer to interact asynchronously, AHT becomes less appropriate for operations planning. Throughput (the number of issues an agent handles within a time period) is a better metric for measuring agent efficiency and managing workforce and operations budgets. ASAPP AI-driven agent augmentation, paired with a flexible concurrency model, enables our customers to safely increase agent throughput while keeping agent workload manageable and still delivering an exceptional customer experience.
