A bridge to the future

Talk of Generative AI transforming contact centers is everywhere. From boardrooms of the world’s largest enterprises to middle management and frontline agents, there’s a near-universal consensus: the impact will be massive. Yet, the path from today’s reality to that future vision is full of challenges.

The key to bringing customer-facing Gen AI to production today lies in a human-in-the-loop workflow, where AI agents consult human advisors whenever needed, just as frontline agents might seek guidance from a tier 2 agent or supervisor. This approach unlocks benefits today and provides a self-learning mechanism that drives greater automation in the future.

Challenges today

We've spilled our fair share of digital ink on how Generative AI marks a step change in automation and how it will revolutionize the contact center. But let's take a moment to acknowledge the key challenges that must be overcome to realize this vision.

- Safety: The stochastic nature of AI means it can "hallucinate"—producing incorrect or misleading outputs.

- Authority: Not every customer interaction is ready for full automation; certain decisions still require human judgment.

- Customer request: Regardless of how advanced an AI agent is, some customers will insist on speaking with a human agent.

- Access: Many systems used by agents today to resolve customer issues lack APIs and are thus inaccessible to Gen AI agents.

Safety: Constant vigilance

AI's ability to generate human-like responses makes it powerful, but this capability comes with risks. Gen AI systems are inherently probabilistic, meaning they can occasionally hallucinate—providing incorrect or misleading information. These mistakes, if unchecked, can erode customer trust at best and damage a brand at worst.

When evaluating a Gen AI system, the critical question to ask is not “will the system hallucinate?” It will. The critical question is, “will hallucinated output get sent to my customers?”

With GenerativeAgent®, every outgoing message is evaluated by an output safety module that, among other things, checks for hallucinations. If a possible hallucination is detected, the message is assigned to a human-in-the-loop advisor for review. This safeguard prevents the dissemination of erroneous information while allowing the virtual agent to maintain control of the interaction.
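The gating step described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not GenerativeAgent's actual implementation: the names `Draft`, `GateDecision`, `gate_outgoing_message`, and `unsupported_amount_check` are hypothetical, and the toy check (flagging dollar amounts that do not appear in known account facts) merely stands in for a real hallucination detector.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set


@dataclass
class Draft:
    """A message the AI agent wants to send (hypothetical structure)."""
    conversation_id: str
    text: str


@dataclass
class GateDecision:
    action: str  # "send" or "hold_for_review"
    reasons: List[str] = field(default_factory=list)


# A check returns a reason string when it flags the draft, otherwise None.
Check = Callable[[Draft], Optional[str]]


def unsupported_amount_check(known_amounts: Set[str]) -> Check:
    """Toy stand-in for a hallucination detector (an assumption).

    Flags any dollar amount in the draft that is not among the
    amounts known to be true for this customer.
    """
    def check(draft: Draft) -> Optional[str]:
        mentioned = set(re.findall(r"\$\d+(?:\.\d{2})?", draft.text))
        unsupported = sorted(mentioned - known_amounts)
        if unsupported:
            return "unsupported amounts: " + ", ".join(unsupported)
        return None
    return check


def gate_outgoing_message(draft: Draft, checks: List[Check]) -> GateDecision:
    """Run every safety check; hold the draft if any check flags it."""
    reasons = []
    for check in checks:
        reason = check(draft)
        if reason is not None:
            reasons.append(reason)
    if reasons:
        return GateDecision("hold_for_review", reasons)
    return GateDecision("send")
```

In a sketch like this, a held draft would be placed in an advisor's review queue while the AI agent keeps the conversation open, so only approved or corrected text reaches the customer.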

In systems without this kind of human oversight, mistakes could lead to escalations, unresolved customer issues, or, worst of all, damaged customer relationships and brand reputation. By integrating a human-in-the-loop workflow, businesses can mitigate the risks inherent to this technology while maximizing the business benefits it offers.

Authority: Responsible delegation

Deploying customer-facing Gen AI in a contact center doesn’t mean relinquishing all decision-making power to the AI. There are situations where human judgment is crucial, particularly when the stakes are high for both the business and the customer.

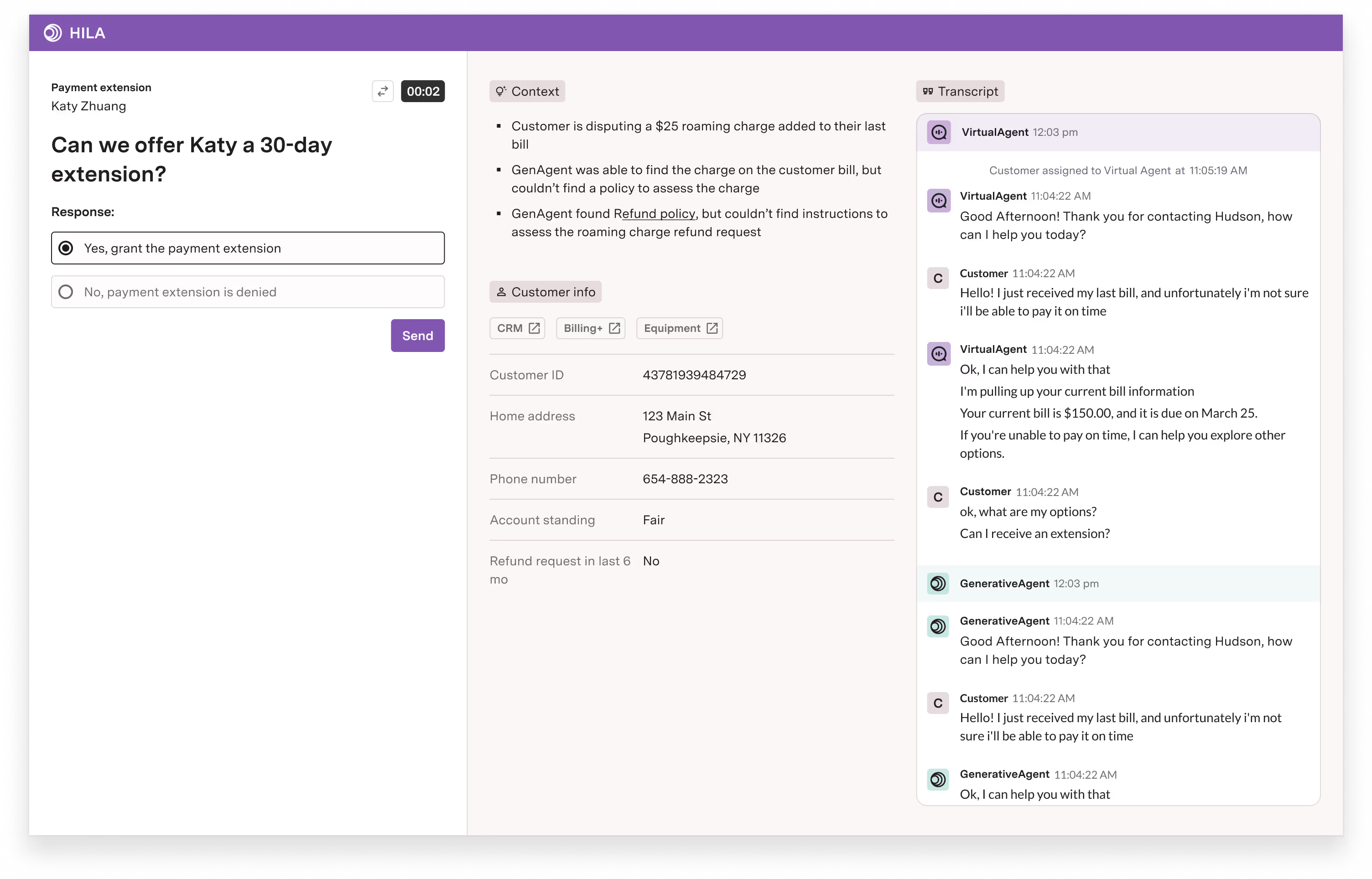

Take, for example, a customer requesting a payment extension or a final review of their loan application. These are pivotal decisions that involve weighing factors like risk, compliance, and customer loyalty. While a Gen AI agent can automate much of the interaction leading up to these moments, the final decision still requires human judgment.

With a human-in-the-loop framework, this is no longer a limitation. The AI agent can seamlessly consult an advisor for approval while maintaining control of the customer interaction, much like a Tier 1 agent consulting a supervisor. This approach provides flexibility—automating what can be automated while reserving human intervention for critical decisions. For the business, this boosts automation rates, reduces escalations, and empowers advisors to focus on high-impact judgments where their expertise is essential.
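One way to picture this delegation is a simple approval check in front of high-stakes actions. The sketch below is illustrative only: the action names in `REQUIRES_APPROVAL`, the `ActionRequest` type, and the `ask_advisor` callback are assumptions made for the example, not part of any documented product API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical set of actions the business reserves for human judgment.
REQUIRES_APPROVAL = {"payment_extension", "loan_final_review"}


@dataclass
class ActionRequest:
    action: str    # e.g. "payment_extension"
    summary: str   # context the advisor needs to decide quickly


def resolve_action(
    request: ActionRequest,
    ask_advisor: Callable[[ActionRequest], bool],
) -> str:
    """Automate routine actions; consult an advisor for reserved ones.

    The AI agent stays in the conversation either way. Only the
    decision itself is delegated to a human.
    """
    if request.action not in REQUIRES_APPROVAL:
        return "executed_automatically"
    approved = ask_advisor(request)
    return "executed_with_approval" if approved else "declined_by_advisor"
```

For example, `resolve_action(ActionRequest("payment_extension", "14-day extension requested"), ask_advisor=my_queue.submit)` would pause only that decision, where `my_queue.submit` is a placeholder for whatever mechanism routes the request to an advisor and returns their answer.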

Customer request: Last-ditch containment

No matter how advanced Gen AI virtual agents become, some customers will still ask to speak with a live agent—at least for now. Of all the challenges outlined, this one is the most intractable, driven by customer habits and expectations rather than technology or process.

Human-in-the-loop offers a smart alternative. Rather than instantly escalating to a live agent when requested, the Gen AI agent could inform the customer of the wait time and propose a review by a human advisor in the meantime. If the advisor can help the Gen AI agent resolve the issue quickly, the escalation can be avoided entirely—delivering a faster resolution without disrupting the flow of the interaction.
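The flow above amounts to a small decision procedure. The following sketch assumes hypothetical names (`EscalationOutcome`, `handle_live_agent_request`) and reduces the customer's choice and the advisor's result to booleans purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class EscalationOutcome:
    escalated: bool
    note: str


def handle_live_agent_request(
    wait_minutes: int,
    accepts_advisor_review: bool,
    advisor_resolved: bool,
) -> EscalationOutcome:
    """Offer an advisor review while the customer waits for a live agent."""
    if not accepts_advisor_review:
        # The customer declines the offer and simply waits in the queue.
        return EscalationOutcome(
            True, f"queued for live agent (about {wait_minutes} min wait)"
        )
    if advisor_resolved:
        # The advisor's input let the AI agent finish the job.
        return EscalationOutcome(False, "resolved with advisor help")
    # The review did not help; the customer keeps their place in the queue.
    return EscalationOutcome(True, "advisor could not resolve; still queued")
```

The point of the design is that the escalation path is never removed. The advisor review runs alongside the wait, so the customer loses nothing by accepting it.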

While not every customer will accept this deflection, it can significantly reduce this type of escalation and address one of the toughest barriers to full automation.

Access: Ready for today, better tomorrow

Many systems that agents rely on today lack APIs, putting a large volume of customer issues beyond the reach of automation.

Rather than dismissing these cases, a human-in-the-loop advisor can bridge this gap—handling tasks on behalf of the Gen AI agent in systems that lack APIs.

The value extends beyond expanding automation. It highlights areas where developing APIs or streamlining access to legacy systems could deliver significant ROI. What was once hard to justify becomes clear: introducing new APIs directly reduces the advisor hours spent compensating for their absence.

Conclusion

Generative AI is poised to reshape the contact center, but unlocking its full potential requires a tightly integrated human-in-the-loop workflow. This framework ensures that customer-facing Gen AI is ready for production today while providing a self-learning mechanism that drives greater automation gains tomorrow.

While AI technology continues to advance, the role of human advisors is not diminishing—it’s evolving. By embracing this collaboration, businesses can strike the right balance between automation and human judgment, leading to more efficient operations and better customer experiences.

In future posts on human-in-the-loop, we’ll dive deeper into how to enhance collaboration between AI and human agents, and explore the evolving role of humans in AI-driven contact centers.