Ethan Elenberg

Ethan Elenberg, PhD, is a Research Scientist at ASAPP. Before joining ASAPP, he completed his PhD at UT Austin under the supervision of Alex Dimakis and Sriram Vishwanath. His research interests include optimization, metric learning, and interpretable AI. Ethan has a BE in Electrical Engineering from The Cooper Union and has worked at Twitter, Apple, and the MIT Lincoln Lab.

How model calibration leads to better automation

Machine learning models offer a powerful way to predict properties of incoming messages, such as sentiment, language, and intent, based on previous examples. We can evaluate a model’s performance in several ways:

Classification error measures how often the model’s predictions are incorrect.

Calibration error measures how far the model’s confidence scores are from the percentage of time the model is actually correct.

For example, if a model is correct 95% of the time, its classification error is 5%. If the same model always reports that it is 99% sure its answers are correct, its calibration error is 4% (99% minus 95%), and the model is overconfident. Together, these metrics help determine whether a model is accurate, inaccurate, overconfident, or underconfident.
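
To make the arithmetic concrete, here is a minimal Python sketch on hypothetical toy data: 100 predictions, 95 of them correct, each reported with 99% confidence. Because every confidence is identical, all predictions fall into a single confidence bin, so the calibration error reduces to the gap between average confidence and accuracy. The variable names are illustrative only.

```python
# Hypothetical toy data: 100 predictions, 95 correct, all reported at 0.99 confidence.
correct = [True] * 95 + [False] * 5
confidences = [0.99] * 100

accuracy = sum(correct) / len(correct)
classification_error = 1 - accuracy

# With a constant confidence, every prediction lands in one bin, so the
# calibration error is simply |average confidence - accuracy|.
avg_confidence = sum(confidences) / len(confidences)
calibration_error = abs(avg_confidence - accuracy)
overconfident = avg_confidence > accuracy

print(f"classification error: {classification_error:.2f}")  # classification error: 0.05
print(f"calibration error: {calibration_error:.2f}")        # calibration error: 0.04
print("overconfident:", overconfident)                      # overconfident: True
```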

Reducing both classification error and calibration error over time is crucial for integrating models into human workflows, and it lets us increase customer impact iteratively. For example, well-calibrated models can let mature platform features apply more automation only when that automation has a high chance of succeeding. Proper calibration also puts multiple models on a common, intuitive scale, so that the overall system can use the most confident prediction and rely on that confidence to reflect how well the system will actually perform.
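
A minimal sketch of the comparison idea, assuming each model already outputs calibrated scores so that they share one scale. The `Prediction` type, the model names, and the `CHANGE_PLAN` label are hypothetical illustrations, not ASAPP APIs.

```python
from typing import NamedTuple


class Prediction(NamedTuple):
    model: str         # hypothetical model identifier
    label: str         # predicted class
    confidence: float  # calibrated score in [0, 1]


def most_confident(predictions: list[Prediction]) -> Prediction:
    """Return the prediction with the highest calibrated confidence.

    Comparing raw scores across models is only meaningful when every model
    is calibrated; otherwise an overconfident model would always win.
    """
    return max(predictions, key=lambda p: p.confidence)


preds = [
    Prediction("intent_model_a", "PAYBILL", 0.91),
    Prediction("intent_model_b", "CHANGE_PLAN", 0.62),
]
best = most_confident(preds)
print(best.model, best.label, best.confidence)  # intent_model_a PAYBILL 0.91
```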

Automating workflows requires a high degree of confidence in the ML model predicting that this is what is needed. Proper calibration increases that confidence.

Ethan Elenberg, PhD

The models developed by ASAPP provide value to our customers not from raw predictions alone, but rather from how those predictions are incorporated into platform features for our users. Therefore, we take several steps throughout model development to understand, measure, and improve the accuracy of confidence measures.

For example, consider the difference between predicting a 95% chance of rain and a 55% chance of rain. A meteorologist would recommend that viewers take an umbrella in the former case but might not in the latter. This weather prediction analogy fits many of the ASAPP models used in intent classification and knowledge-base retrieval. If a model predicts “PAYBILL” with score 0.95, we can send the customer to the “Pay my bill” automated workflow with a high degree of confidence that it will serve their need. If the score is 0.55, we might instead ask the customer to clarify whether they want to “pay their bill” or do something else.
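
The routing decision can be sketched as a simple threshold check. The 0.90 threshold, the `route` function, and the returned action strings are hypothetical; in practice a threshold would be chosen from validation data. The check is only trustworthy if the score is calibrated, so that a score of 0.95 really corresponds to roughly 95% accuracy.

```python
# Hypothetical threshold for illustration; not an actual ASAPP setting.
AUTOMATE_THRESHOLD = 0.90


def route(intent: str, score: float, threshold: float = AUTOMATE_THRESHOLD) -> str:
    """Send the customer to the automated workflow only when the calibrated
    score clears the threshold; otherwise ask a clarifying question."""
    if score >= threshold:
        return f"automate:{intent}"
    return f"disambiguate:{intent}"


print(route("PAYBILL", 0.95))  # automate:PAYBILL
print(route("PAYBILL", 0.55))  # disambiguate:PAYBILL
```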

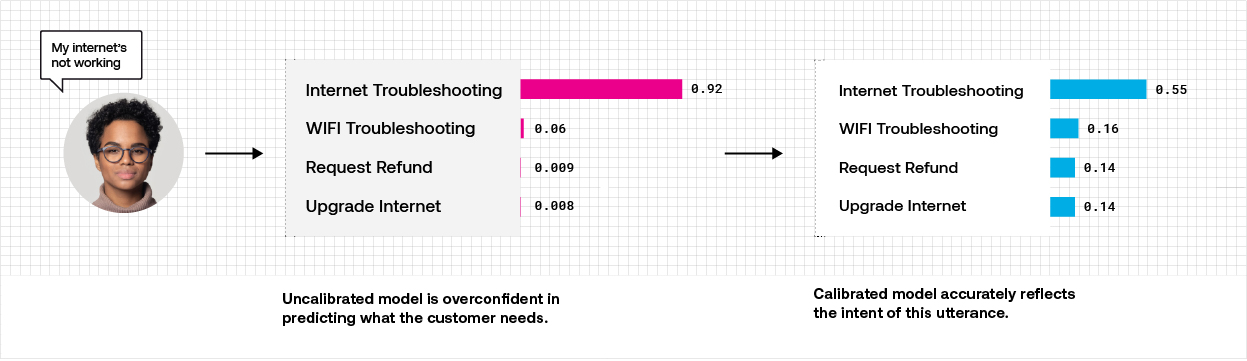

Following our intuition, we would like a model to return 0.95 when it is 95% accurate and 0.55 when it is only 55% accurate. Calibration enables us to achieve this alignment. Throughout model development, we track the mismatch between a model’s scores and its empirical accuracy with a metric called expected calibration error (ECE). ASAPP models use a method called temperature scaling, which divides the model’s raw scores (logits) by a single learned constant, the temperature, before converting them to probabilities. Because dividing by a positive constant does not change which class scores highest, this shifts the model’s confidence levels in a way that reduces calibration error while leaving its predictions, and therefore its accuracy, unchanged. The results can be significant: for example, one of our temperature-scaled models was shown to have 85% lower ECE than the original model.
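
The sketch below illustrates both ideas in plain Python on a small, hypothetical, deliberately overconfident validation set. It computes ECE with 10 equal-width confidence bins (a common choice, assumed here) and fits the temperature by minimizing negative log-likelihood over a coarse grid, which stands in for the gradient-based optimization usually used. None of the function names, data, or numbers reflect ASAPP’s implementation or results.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of the logits divided by a temperature T > 0."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE: sum over bins of (bin size / n) * |accuracy - mean confidence|."""
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Bin b holds confidences in (lo, hi]; the first bin also takes exactly 0.
        idx = [i for i, c in enumerate(confidences) if lo < c <= hi or (b == 0 and c == 0.0)]
        if not idx:
            continue
        acc = sum(correct[i] for i in idx) / len(idx)
        conf = sum(confidences[i] for i in idx) / len(idx)
        ece += len(idx) / n * abs(acc - conf)
    return ece


def nll(rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    return -sum(math.log(softmax(z, temperature)[y]) for z, y in zip(rows, labels)) / len(labels)


def fit_temperature(rows, labels, grid=None):
    """Pick the T that minimizes held-out NLL via a coarse grid search."""
    if grid is None:
        grid = [0.5 + 0.05 * k for k in range(91)]  # T from 0.5 to 5.0
    return min(grid, key=lambda t: nll(rows, labels, t))


def summarize(rows, labels, temperature):
    """Return (predicted classes, top-class confidences, 0/1 correctness flags)."""
    probs = [softmax(z, temperature) for z in rows]
    preds = [max(range(len(p)), key=p.__getitem__) for p in probs]
    confs = [max(p) for p in probs]
    correct = [int(p == y) for p, y in zip(preds, labels)]
    return preds, confs, correct


# Hypothetical two-class validation set with large logits but only 75% accuracy.
rows = [[4.0, 0.0]] * 10 + [[0.0, 3.0]] * 10
labels = [0] * 7 + [1] * 3 + [1] * 8 + [0] * 2

preds_before, confs_before, correct_before = summarize(rows, labels, 1.0)
T = fit_temperature(rows, labels)
preds_after, confs_after, correct_after = summarize(rows, labels, T)

ece_before = expected_calibration_error(confs_before, correct_before)
ece_after = expected_calibration_error(confs_after, correct_after)
print(f"T = {T:.2f}, ECE before = {ece_before:.3f}, ECE after = {ece_after:.3f}")
print("predictions unchanged:", preds_before == preds_after)
```

Because dividing every logit by the same positive T preserves their order, the predicted classes, and therefore accuracy, are identical before and after scaling; only the confidences move toward the observed accuracy.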

When ASAPP incorporates AI technology into its products, we use model calibration as one of our main design criteria. This helps multiple machine learning models work together to create the best automated experience for our customers.

In Summary

Machine learning models can be either overconfident or underconfident in their predictions. The intent classification models developed by ASAPP are calibrated so that their prediction scores match their expected accuracy, which lets them deliver a high level of value to ASAPP customers.